Welcome back to The Data Analyst’s LLM Masterclass series.

In Part 1, we stopped asking and started directing using the Command Framework. Now, we apply that framework to your daily reality.

You have your raw data. At this stage, you could use an LLM for many things—summarizing its contents, generating hypotheses, or suggesting enriched data sources.

But none of that matters if the data itself is garbage.

You know the cliché: we spend 80% of our time cleaning data and 20% complaining about it. It’s a cliché because it’s true. Data preparation is tedious, messy, and absolutely critical. It is a task governed by rules and procedures, results in predictable outcomes, and requires very little creativity.

It is the perfect candidate for AI automation.

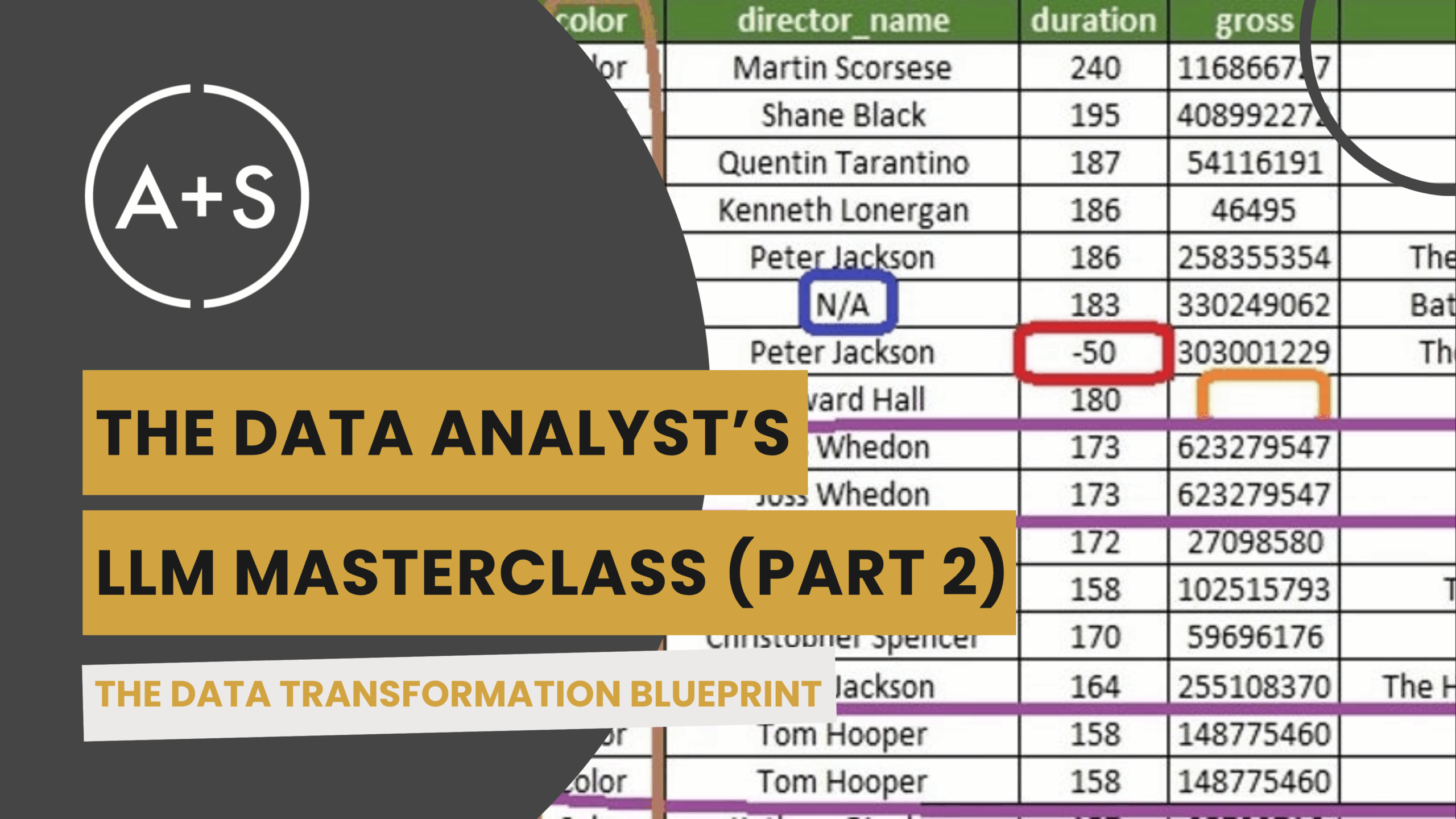

Yet, this is where most analysts fail. They upload a messy CSV and give a lazy command: “Clean this dataset.”

This is catastrophic. The LLM is eager to please. It will “clean” your data by making massive, undocumented assumptions. It will silently delete outliers that were actually critical signals. It will hallucinate standardizations. It turns messy data into wrong data.

You don’t need a generic search-and-replace. You need a surgical instrument.

The Core Concept

The mistake novices make is asking the LLM to be the cleaner. They paste data and ask for a fixed version back. This is unscalable and dangerous. You don’t know what rules the LLM applied, and it will fail on large datasets due to token limits.

Professional analysts don’t ask LLMs to clean data. Professionals ask LLMs to write code that cleans data.

Code is auditable. Code is repeatable. Code scales to millions of rows without hallucinating. We are not just asking for a clean CSV; we are asking for a Data Transformation Blueprint—an executable script that we can trust.

The Strategic Framework

To write a perfect Data Transformation Blueprint, we need to actively engage all three pillars of the Command Framework.

Pillar 1: Context (Role & Tool Selection)

First, define the Role. Begin by scoping the persona: “Act as an expert Data Engineer…”

Why Data Engineer? Because an Analyst interprets data, but an Engineer builds pipelines. When cleaning data, you need the rigid, systems-thinking mindset of an engineer.

Continue the role by specifying the tool you’ll use: “…specializing in [Python pandas | R tidyverse] data transformation.”

Which tool should you choose?

The one you can audit. While Python is slightly more popular for engineering pipelines, an LLM is equally proficient in R. If you can’t read Python well enough to spot a bug, ask for R. Never ask for code you cannot verify.

Next, the Context. I cannot stress this enough: Never ask an LLM to write code for data it cannot see.

It needs the schema and the messy patterns.

• Python users: Paste the output of df.info() and df.head(5) into the <context> tag.

• R users: Paste the output of str(df) or glimpse(df) and head(df, 5) into the <context> tag.

Pro Tip for Sensitive Data: If your first five rows contain PII (like actual emails or SSNs), obfuscate them before pasting. The LLM needs to see the format (e.g., that your emails are messy strings with extra spaces), not the actual secrets.

Pillar 2: Goal (Separating Logic from Engineering)

Stop using the word “clean” as your instruction. It’s too vague. You must bifurcate your goals into two distinct categories: the WHAT and the HOW.

1. WHAT: Transformation Requirements

These are your business rules. You are telling the LLM what must happen to the data.

• "Standardize 'Order_Date' column to YYYY-MM-DD format."

• "Impute missing values in 'Age' using the median."

2. HOW: Code Specifications

These are your technical engineering standards. You are telling the LLM how to write the script.

• "Write a [Python | R] script to transform this data." (Your primary command).

• "Include comments explaining non-obvious transformations (especially regex)."

• "Use vectorized operations; avoid iterating through rows for performance."The Debug Loop: Stop. LLM code will sometimes bug out—often due to a weird edge case in your data that wasn’t in the head() sample.

Don’t try to fix it yourself. Copy the entire error trace and paste it back into the chat with the command: “Fix this error. Do not change the processing logic, only fix the bug.”

Pillar 3: Rules (Constraints & Examples)

This is where you deliver on the promise of a “surgical” instrument.

The Constraints must prevent data loss. LLMs love to solve problems by deleting the difficult rows. Forbid this explicitly by writing a list of clear, actionable constraints:

<constraints>

• DO NOT delete any rows unless completely empty.

• If a value cannot be converted, log it to a separate ‘error_report’ dataframe rather than dropping it

</constraints>

The Examples are your superpower for messy text.

Under the hood, standardizing messy text usually requires ‘Regular Expressions’ (Regex)—complex pattern-matching code that is painful for humans to write.

Don’t try to write Regex manually. Don’t even try to describe it in paragraphs. Show it. You can even add a comment in the tag to explain the task.

<examples>

#Task: Standardize ‘Region’ column to 2-letter codes.

• Input: ‘Calif.’ -> Output: ‘CA’

• Input: ‘New York State’ -> Output: ‘NY’

• Input: ‘WA – Washington’ -> Output: ‘WA’

</examples>

The LLM looks at these simple pairs, deduces the underlying pattern, and writes the complex Regex for you. It’s the ultimate low-code hack.

The Analyst’s Playbook

Here is your template for the Transformation Blueprint that you can customize to fit your specific data transformation needs:

<role>

Act as an expert Data Engineer specializing in Python pandas data transformation.

</role>

<context>

• Target dataframe schema: [PASTE df.info() OR str(df) HERE]

• Sample data: [PASTE df.head(5) OR head(df, 5) HERE]

</context>

<instructions>

Write a complete Python script to transform this data.

#Task: Transformation Requirements (WHAT)

• Standardize ‘Order_Date’ to YYYY-MM-DD.

• Impute missing values in ‘Age’ using the median.

• Deduplicate based on ‘User_ID’, keeping the latest entry.

#Task: Code Specifications (HOW)

• Use vectorization where possible.

• Include comments for any regex used.

• Output the final cleaned dataframe as ‘df_clean’.

<constraints>

• DO NOT delete any rows unless they are completely empty.

• If a value cannot be converted (e.g., a bad date), coerce it to NaT/NA, do not drop the row.

</constraints>

<examples>

#Task: Standardize ‘Region’ column.

• Input: ‘west_coast’ -> Output: ‘West’

• Input: ‘East’ -> Output: ‘East’

• Input: ‘N. America’ -> Output: ‘North America’

</examples>

Final Thoughts

Data preparation is the foundation of your entire analysis. If your foundation is cracked because you used a lazy prompt, your sophisticated models later on will just be expensive noise generators.

Don’t let AI guess at your foundation. Give it a blueprint. Be surgical.

Next week, in Part 3, we’ll move past cleaning. We’ll use the LLM for Exploratory Data Analysis (EDA)—not just to generate charts, but to find the patterns you missed.

Keep Analyzing!