The three-part Command Framework is now your standard. You structure every prompt around a role, context, and instructions, leaving generic LLM usage behind.

Last week, we treated the LLM as an Analytical Sparring Partner. We used it to challenge confirmation bias, and it presented you with a counter-hypothesis you must now test.

This week, we pivot to advanced applications. We address the professional turning point: It’s time to code the model.

Your success in Parts 1-3 forces this move. You have a clean dataset. You have a deep, bulletproof insight. Now you must build the statistical model required to formally validate the hypothesis or meet the business mandate.

The question is not whether you are tempted to run a full black-box model. The question is how to execute a complex model with rigor and speed when the code structure is unfamiliar.

Your LLM is a powerful assistant. Treat it as your expert modeler, one that you direct. You must guide it to construct the precise, auditable code required for advanced analysis, bridging the gap between your existing skill set and the statistically rigorous techniques you need to execute.

The Core Concept

Modeling is the necessary conclusion to a successful analytical argument.

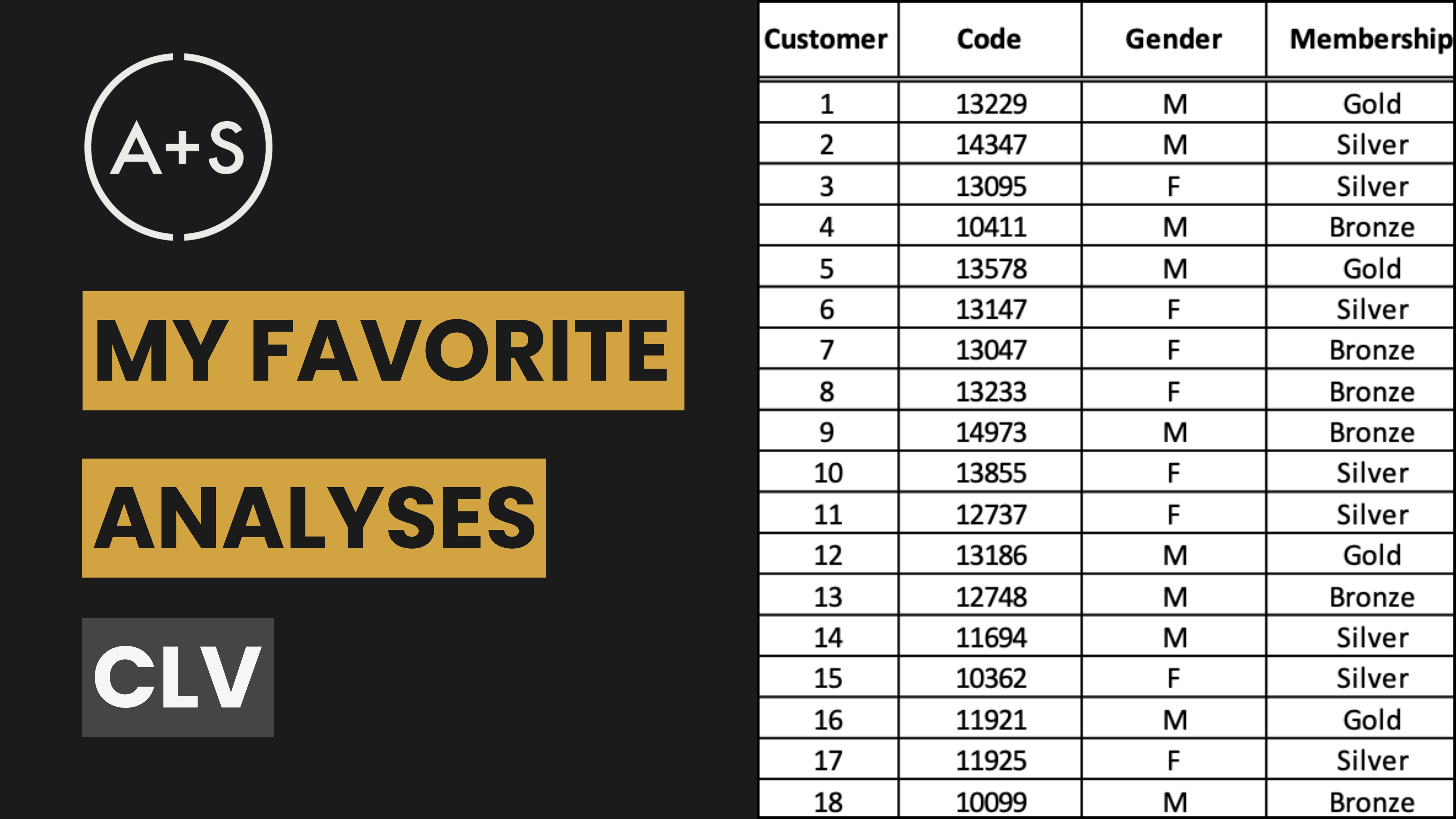

You are now required to execute. A colleague asks you to build a Survival Model. Your LLM Sparring Partner challenges you to build an “XGBoost classifier” (whatever that is!). Or maybe you just know you have to figure out a way to coax a Customer Lifetime Value calculation out of R.

This is where the LLM delivers its highest value: it translates complex statistical ideas into production-ready code.

When you ask the LLM for a model, you are not asking it to think for you. You are asking it to provide the necessary framework: the diagnostic tests, the optimized search loops, and the final documentation strings.

If you skip this step, your argument collapses. A model built without pre-execution diagnostics is a structure without a foundation. A model tuned without discipline either underperforms or burns compute on an exhaustive search.

You use the Command Framework to impose Code Discipline. You command the LLM to break the statistical blueprint into its component parts. You demand verifiable code for each technical element. You command the execution. You maintain the strategic oversight.

The Strategic Framework

Disciplined execution rests on three pillars of code construction. Together, these pillars make the model you build defensible.

First, you command Code for Governance. You do not trust the data or the model. You command the LLM to write code that checks the model's underlying statistical assumptions before you fit the final model. This confirms the model rests on a valid foundation, or warns you early that it does not. This step mitigates risk.
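
To make the governance pillar concrete, here is a minimal sketch of a pre-execution gate, assuming a numeric feature matrix and a linear model. It computes variance inflation factors (VIF) with plain NumPy and refuses to proceed when multicollinearity is severe. The function names and the threshold of 10 (a common rule of thumb) are illustrative assumptions, not part of any library.

```python
import numpy as np


def variance_inflation_factors(X):
    """Return one VIF per column: 1 / (1 - R^2) from regressing that column on the others."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        # Design matrix: intercept plus every other feature.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs.append(np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2))
    return np.array(vifs)


def check_collinearity(X, threshold=10.0):
    """Hypothetical gate: raise before fitting if any VIF exceeds the threshold."""
    vifs = variance_inflation_factors(X)
    flagged = np.flatnonzero(vifs > threshold)
    if flagged.size:
        raise ValueError(
            f"Severe multicollinearity in columns {flagged.tolist()}: "
            f"VIF = {np.round(vifs[flagged], 1).tolist()}"
        )
    return vifs


rng = np.random.default_rng(0)
x1, x2 = rng.normal(size=(2, 200))
X_ok = np.column_stack([x1, x2])
X_bad = np.column_stack([x1, x2, x1 + 0.01 * rng.normal(size=200)])  # near-copy of x1

print(check_collinearity(X_ok))  # both VIFs close to 1
try:
    check_collinearity(X_bad)
except ValueError as err:
    print(err)
```

The gate raises instead of warning so that a violated assumption stops the pipeline before any model is trained.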

Second, you command Code for Efficiency. You do not accept wasteful processing. You command the LLM to apply statistical judgment and define a small, targeted search over the key parameters. This keeps tuning fast even as your models grow more complex. This step optimizes resources.
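
The efficiency argument is simple arithmetic: a grid search fits one model per parameter combination per cross-validation fold. The sketch below counts fits for a hypothetical "try everything" grid versus a limited three-value grid. The parameter names are scikit-learn `DecisionTreeClassifier` arguments; the specific ranges are illustrative assumptions.

```python
from math import prod


def count_fits(grid, cv_folds):
    """Number of model fits a full grid search performs: combinations x folds."""
    return prod(len(values) for values in grid.values()) * cv_folds


# Hypothetical exhaustive grid.
exhaustive = {
    "max_depth": list(range(1, 21)),             # 20 values
    "min_samples_leaf": list(range(1, 21)),      # 20 values
    "ccp_alpha": [i / 1000 for i in range(10)],  # 10 values
}

# Limited grid: two key parameters, three widely spaced values each.
limited = {
    "max_depth": [3, 6, 12],
    "ccp_alpha": [0.0, 0.001, 0.01],
}

print(count_fits(exhaustive, cv_folds=5))  # 20 * 20 * 10 * 5 = 20000
print(count_fits(limited, cv_folds=5))     # 3 * 3 * 5 = 45
```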

Third, you command Code for Auditability. You do not write documentation after the fact. You command the LLM to generate the documentation and model notes alongside the final model code. This ensures the model is production-ready and fully explained.
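
One way to produce model notes alongside the code, rather than after it, is to write a machine-readable sidecar file in the same step that saves the model. This is a minimal sketch using only the standard library. The function name, the JSON field names, and the `.notes.json` naming convention are hypothetical, not a standard.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def write_model_notes(model_path, params, metrics, training_rows, purpose):
    """Write a JSON sidecar (hypothetical format) describing how a model artifact was produced."""
    model_path = Path(model_path)
    notes = {
        "artifact": model_path.name,
        # Fingerprint the saved file so reviewers can match notes to the exact artifact.
        "sha256": hashlib.sha256(model_path.read_bytes()).hexdigest(),
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "purpose": purpose,
        "parameters": params,
        "metrics": metrics,
        "training_rows": training_rows,
    }
    # e.g. production_model.pkl -> production_model.notes.json
    notes_path = model_path.parent / (model_path.stem + ".notes.json")
    notes_path.write_text(json.dumps(notes, indent=2))
    return notes_path
```

Call it immediately after saving the model, passing the parameters and validation metrics from the same run, so the notes cannot drift from the artifact they describe.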

This process keeps you in intellectual control. It transforms the LLM from a generic copilot into a precision tool for statistical execution.

It’s Time to Code the Model

The power is in the command sequence. This is how you implement the three pillars, using tried-and-tested prompt templates that you can adapt to your specific needs.

Command Prescriptive Diagnostics (Governance): Command the diagnostic code.

<role>

Act as a Statistical Governance Expert. Your goal is to verify the statistical fitness of the data.

</role>

<context>

I am using [MODEL TYPE, e.g., Linear Regression] and need to check if my [DATASET NAME] data violates a key assumption like [ASSUMPTION, e.g., independence of errors].

</context>

<instructions>

Write the complete [LANGUAGE, e.g., Python] code to perform a relevant statistical test (e.g., Durbin-Watson).

Clearly explain the test’s purpose and what a negative result (e.g., failure to reject the null hypothesis) means.

</instructions>
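
For reference, here is a minimal sketch of the kind of Python this prompt should return for the Durbin-Watson case, written with NumPy only so every step is visible. It assumes a linear regression on a numeric feature matrix; the simulated data and function names are illustrative. In practice, `statsmodels.stats.stattools.durbin_watson` computes the same statistic. Note that Durbin-Watson yields a statistic rather than a p-value: values near 2 suggest no first-order autocorrelation, values well below 2 suggest positive autocorrelation, and a formal reject or fail-to-reject decision needs tabulated lower and upper bounds for your sample size and number of predictors.

```python
import numpy as np


def ols_residuals(X, y):
    """Fit ordinary least squares with an intercept and return the residuals."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef


def durbin_watson(residuals):
    """DW = sum of squared successive differences / sum of squared residuals (range 0 to 4)."""
    e = np.asarray(residuals, dtype=float)
    return float(np.sum(np.diff(e) ** 2) / np.sum(e ** 2))


rng = np.random.default_rng(42)
n = 500
X = rng.normal(size=(n, 2))
signal = 1.0 + X @ np.array([2.0, -1.0])

# Case 1: independent errors (assumption holds).
y_iid = signal + rng.normal(size=n)

# Case 2: AR(1) errors with strong positive autocorrelation (assumption violated).
ar_errors = np.zeros(n)
for t in range(1, n):
    ar_errors[t] = 0.9 * ar_errors[t - 1] + rng.normal()
y_ar = signal + ar_errors

dw_iid = durbin_watson(ols_residuals(X, y_iid))
dw_ar = durbin_watson(ols_residuals(X, y_ar))
print(f"independent errors: DW = {dw_iid:.2f}")  # close to 2
print(f"AR(1) errors:       DW = {dw_ar:.2f}")   # far below 2
```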

Command Intelligent Tuning (Efficiency): Command the LLM to define the smart tuning space.

<role>

Act as a Model Optimization Expert. Your goal is to define the most efficient parameter search space.

</role>

<context>

I am building a [MODEL TYPE, e.g., Decision Tree] with [NUMBER] features. I need to prevent overfitting and ensure the tuning process is fast for a production environment.

</context>

<instructions>

Define a parameter search space in [LANGUAGE, e.g., R or Python] (a named list in R, a dictionary in Python). Include [PARAMETER 1, e.g., tree depth] and [PARAMETER 2, e.g., regularization strength].

Provide only three widely spaced values for each parameter so the search stays efficient.

</instructions>
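
Here is a minimal sketch of what the tuning prompt might return in Python, assuming scikit-learn and a `DecisionTreeClassifier`. Tree depth maps to `max_depth`; for a single decision tree, the closest built-in "regularization strength" is cost-complexity pruning via `ccp_alpha`. That mapping, the three values per parameter, and the synthetic data are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Three widely spaced values per parameter: 3 x 3 = 9 candidates.
param_grid = {
    "max_depth": [3, 6, 12],          # shallow, medium, deep trees
    "ccp_alpha": [0.0, 0.001, 0.01],  # no pruning, light pruning, heavier pruning
}

# Synthetic stand-in for your training data.
X_train, y_train = make_classification(n_samples=1000, n_features=20, random_state=0)

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid=param_grid,
    scoring="f1",
    cv=5,
)
search.fit(X_train, y_train)
print(search.best_params_, round(search.best_score_, 3))
```

Spacing the values widely lets nine candidates cover the range from an underfit stump to a deep, lightly pruned tree; if the best value lands at an edge, extend the grid in that direction rather than densifying it everywhere.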

Command Full Model Code Generation: Now you execute the core task.

<role>

Act as a Machine Learning Engineer. Your goal is to produce a production-ready, reproducible model artifact.

</role>

<context>

Apply the optimized parameters from the tuning step, [BEST PARAMETERS], to a final [MODEL TYPE] classifier. The training data is named ‘X_train’ and ‘y_train’; the validation data is named ‘X_val’ and ‘y_val’.

</context>

<instructions>

Write a production-ready [LANGUAGE] script. The script must train the final model and print the validation [METRIC, e.g., Accuracy] and [METRIC 2, e.g., F1 score].

Use a standard serialization library (e.g., joblib or pickle in Python) to save the complete, trained model object to a file named [FILENAME, e.g., ‘production_model.pkl’].

</instructions>
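
A minimal sketch of the final script in Python, assuming scikit-learn. The best parameters are hard-coded as hypothetical placeholders (paste in whatever your search returned), synthetic data stands in for your real features and labels, and a held-out split plays the role of the validation set. The model is saved with the standard-library `pickle` module; scikit-learn's documentation also describes `joblib` for this. Either way, only load pickled models from sources you trust.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

RANDOM_STATE = 0  # fixed seed so reruns give the same model
BEST_PARAMS = {"max_depth": 6, "ccp_alpha": 0.001}  # hypothetical: paste your search results here
MODEL_PATH = "production_model.pkl"


def main(model_path=MODEL_PATH):
    # Synthetic stand-in data; replace with your real features and labels.
    X, y = make_classification(n_samples=1000, n_features=20, random_state=RANDOM_STATE)
    X_train, X_val, y_train, y_val = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=RANDOM_STATE
    )

    # Train the final model with the chosen parameters.
    model = DecisionTreeClassifier(random_state=RANDOM_STATE, **BEST_PARAMS)
    model.fit(X_train, y_train)

    # Report validation metrics on data the model has not seen.
    preds = model.predict(X_val)
    print(f"Validation accuracy: {accuracy_score(y_val, preds):.3f}")
    print(f"Validation F1:       {f1_score(y_val, preds):.3f}")

    # Save the complete fitted estimator.
    with open(model_path, "wb") as fh:
        pickle.dump(model, fh)
    return model


if __name__ == "__main__":
    main()
```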

Command Artifact Generation (Auditability): Command the final documentation.

<role>

Act as a Technical Writer. Your goal is to generate clear, compliant documentation for stakeholders.

</role>

<context>

The purpose of the model is [BUSINESS GOAL, e.g., churn prediction]. The final [METRIC, e.g., Accuracy] was [VALUE]. This Model Card will be used for regulatory review and deployment.

</context>

<instructions>

Draft the contents of a three-part Model Card in plain text: 1) Model Purpose, 2) Key Metrics (stating the [METRIC] result of [VALUE]), and 3) a brief, non-technical explanation of why a [MODEL TYPE] was chosen over a simpler method.

</instructions>
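
If you want the Model Card's structure to stay identical from one model to the next, a small template can assemble the three sections from your own inputs, leaving the LLM (or you) to supply only the prose. This is a hypothetical helper in plain Python; the section wording and argument names are assumptions, and every value passed in should come from your actual results.

```python
def render_model_card(purpose, metric_name, metric_value, model_type, rationale):
    """Assemble a plain-text, three-part Model Card (hypothetical layout)."""
    sections = [
        ("1) Model Purpose", purpose),
        ("2) Key Metrics", f"{metric_name}: {metric_value}"),
        (f"3) Why a {model_type} instead of a simpler method", rationale),
    ]
    # Blank line between sections keeps the card readable as plain text.
    return "\n\n".join(f"{title}\n{body}" for title, body in sections)


print(render_model_card(
    purpose="[BUSINESS GOAL]",
    metric_name="[METRIC]",
    metric_value="[VALUE]",
    model_type="[MODEL TYPE]",
    rationale="[plain-language explanation from the LLM draft]",
))
```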

Final Thoughts

Modeling is a strategic decision-making process. It is the moment the analysis becomes executable.

You use the LLM to generate structural code for every phase. You use your intellect to enforce the discipline. This ensures every assumption is validated and every result is earned.

Next week, we move to Part 5: LLMs for Data Storytelling & Visualization. We turn the validated model into a compelling narrative.

For now, impose the discipline. Keep Analyzing!