At some point in the (probably very) near future, Data Analysts will no longer be required to code in SQL, R, and Python to do their jobs.

Why?

The reason for this is simple: as Large Language Models (LLMs), such as ChatGPT, continue to evolve at a rapid pace, analytics tools will be able to operate efficiently through spoken prompts. This will allow for the same data analysis to be completed in less time compared to using coded commands. (If you haven’t seen how OpenAI is pushing the boundaries of NLP, computer vision, and AI do it now here.)

Is that a good thing?

Well, here things are a bit more complicated: The advancement of these technologies will enhance our productivity, and the difficulty in using them is diminishing (which is a positive thing). However, with increased accessibility comes the risk of placing powerful technology in the hands of analysts unprepared to wield them (which is the challenging aspect).

To guarantee that your use of LLMs for coding tasks is responsible and effective, follow these 5 essential tips.

1. Recognize the limitations of LLMs

LLMs, whether they are ChatGPT, Claude, Gemini, or any other, are not human coders. They can only perform as well as their training data allows. While they have been trained on SQL, R, and Python code, some of that code may be flawed. This means that the LLM may generate code that depends on outdated or nonexistent packages, or contains errors.

It is important to remember that a LLM is essentially an algorithm created to predict the next word in a sequence. Its sole purpose is prediction; it can only anticipate what token will follow in a chain of tokens. The LLM cannot guarantee the functionality of the code it generates. As such, expect to go through a process of trial and error when utilizing a LLM.

Additionally, all LLMs have a tendency to “hallucinate” and make things up. They may go off course or take unexpected turns even when everything seems to be going well. For example, I was writing an R script with ChatGPT when suddenly it switched over to Python. I simply had to remind it that I was writing R and it made the necessary adjustments.

This is why it’s important to carefully review the code produced by an LLM and not expect it to be perfect.

2. Have a basic understanding of SQL, R, or Python (whichever language you are coding)

When utilizing an LLM for coding, it’s crucial that you are not just a passive observer. While LLMs can assist, they cannot teach. They will follow your directions, but if those are incorrect or off-target, it can be difficult to get the LLM back on track.

Having a solid understanding of your programming language is essential in effectively directing the LLM. For example, importing data into an R environment may seem simple, but there are over 100 different commands across various packages based on factors like data size, format, and source.

Knowing the distinctions between basic commands – such as using read.csv for CSV files and read_excel for Excel data – allows you to provide precise information to the LLM with simple statements like “I have data in a CSV file,” leading to a smoother coding process.

Other important basics to grasp within programming languages include:

Commenting Best Practices

Commenting is crucial for effective coding. It is important to encourage the LLM to include comments throughout your code, particularly in more intricate tasks. These comments can provide helpful insights into the purpose of a function or the intended outcome of a complex SQL query, allowing you to give clearer direction to the LLM.

Libraries and Frameworks

Becoming familiar with the common libraries and frameworks for your programming language can greatly enhance your use of an LLM tool. In Python, knowing how to utilize Pandas for data manipulation, NumPy for numerical data, and Matplotlib for creating visualizations is essential. For R, packages such as ggplot2 for data visualization, dplyr for manipulating data, and shiny for building interactive applications are crucial. And in SQL, having a strong grasp on the fundamental “SELECT, FROM, WHERE” clauses is necessary for constructing efficient database queries.

Understanding Error Messages and Debugging

While LLMs can assist in identifying errors, having a strong grasp of common error messages in your chosen languages will greatly improve efficiency. For example:

-

In Python, a SyntaxError might indicate a typo or a missing element like a colon.

-

In R, an object not found error often means a variable hasn’t been defined.

-

In SQL, a missing keyword error usually points to syntax mistakes in your SQL queries.

Understanding these messages enables you to save considerable time and headache by correcting code yourself.

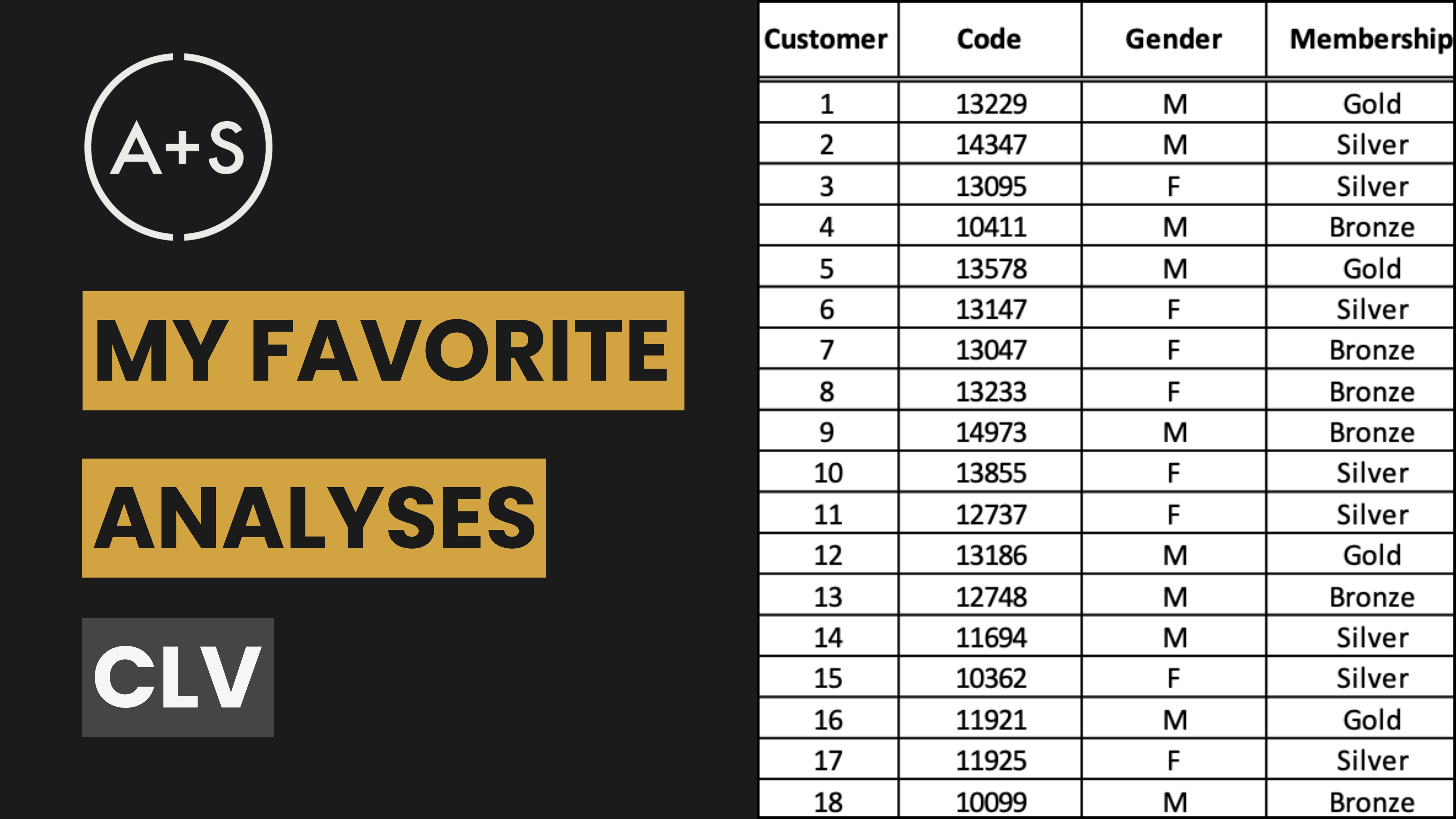

3. Know your data (and teach it to the LLM)

An LLM will not automatically have knowledge of your data. If you rely solely on it to interpret and understand each element in your data, you may encounter inaccuracies and mistakes. To avoid this, it is important to have a thorough understanding of your data and work closely with the LLM to ensure it has the same level of comprehension. I achieve this by making sure I am well-informed about each data element, including its definition and type, before uploading the dataset (or at least the first 100 lines for large datasets) and asking the LLM to generate a Data Dictionary. This includes:

-

Variable Name: A concise and descriptive name for each field or column in the dataset.

-

Variable Type: The type of data represented by each variable (e.g., integer, float, varchar, date).

-

Description: A detailed explanation of what each variable represents, along with any contextual information or nuances that may not be obvious.

-

Data Format/Pattern: If applicable, the expected format (e.g., YYYY-MM-DD for specific dates) or pattern (e.g., regex for validation) should be included.

-

Example Data: Including example entries for each variable can help users understand the type of data expected.

I review the output from the LLM and make any necessary corrections. Next, I add some more complex elements that the LLM may need guidance on. These include:

Allowed Values: For categorical variables, a list of possible values along with their meanings should be provided. Numerical variables should have range constraints specified.

-

Related Variables: Notes on how each variable relates to others in the dataset can be helpful, particularly for complex datasets.

-

Update Frequency: This indicates how often the data is updated (e.g., real-time, daily, monthly), providing insight into its freshness and relevance.

-

Required/Optional: Specifies whether a particular field is mandatory or can be left empty, which is important for data entry and validation processes.

-

Validation Rules: Any rules that must be followed when entering data in a field should be noted (e.g., positive numbers only, specific format required).

Finally, there are some elements that the LLM will not know. To prevent any erroneous information from being stored in its memory, I provide these elements myself if I have knowledge of them:

-

Source: Information on where the data comes from or how it is generated (e.g., method of collection, system name).

-

Data Steward: The person or role responsible for managing each data element. This contact information is crucial for inquiries about the data.

-

Security Classification: This indicates the sensitivity of the data, such as whether it is public, confidential, or classified, and guides how it should be handled.

-

Developing and maintaining a comprehensive data dictionary improves the accuracy and reliability of using data, simplifies the onboarding process for new team members, and supports effective data governance practices.

4. Clearly define the analysis you want done

Before beginning your analysis, it is important to have a solid plan in place. Depending solely on the LLM will only provide a surface-level understanding of the data. While this may be enough for basic analysis, the LLM is not suitable for tackling more complex questions. It is best to start by developing a hypothesis and identifying key questions that can help test it.

I find it helpful to give the LLM context – describing the situation, any complications that have arisen, and the question that needs to be answered. Then, I present my hypothesis and invite the LLM to share their insights, maintaining a logical progression in my introduction. After that, I outline the questions I plan to explore in order to test my hypothesis and request that the LLM assess them, ensuring they are well-structured and not overlapping. Once these questions are established, we can proceed with the analysis.

It’s important to keep in mind that the LLM is limited in its understanding of the whole picture; after all, it is just a machine. It needs clear instructions from you in order to understand your goals and objectives for the analysis. To the LLM, your code is simply a collection of words it has encountered in its training data – it cannot uncover hidden patterns in your data without proper direction from you.

For instance, let’s say you’re analyzing retail sales data that includes information on various store sizes and locations, some of which may have closed or reopened during the time period being studied. In order to gain a true understanding of this data, it’s crucial to normalize it (e.g. by sales per square foot or per capita). Without explicit guidance from you, the LLM will only provide surface-level insights.

As a human analyst, you are responsible for ensuring the accuracy and quality of the analysis because the LLM alone is not capable of doing so.

Step 5: Request code from the LLM in small increments

Even when the LLM has a comprehensive understanding of your data and understands the analysis you want to complete, it can easily become overwhelmed and make errors. Its complex algorithms and vast knowledge base make it a powerful tool, but also one that must be carefully managed. To combat this, it is crucial to break down your requests for code into manageable segments, creating a roadmap for completing your entire analysis.

This method guarantees that every step is carried out with precision and careful attention to detail, reducing the chances of errors or miscalculations. By breaking down the process into smaller tasks, you allow the LLM to concentrate on one task at a time, preventing overwhelm and promoting more precise outcomes. Since LLMs have a tendency to “hallucinate” or get sidetracked, it is crucial to keep them focused and ensure that their output aligns with your objectives.

While the LLM can assist in reducing errors, they may still occur. However, these mistakes can be used as learning opportunities to improve the LLM’s output. By providing specific error messages and copying them into the LLM prompt alongside a relevant message, it can help diagnose any issues and make necessary adjustments to the code. Keep repeating this process until the LLM successfully fixes the error. It’s important to note that there may be occasions where the suggested fix is not accurate, so persistence is key when inputting errors for the LLM to analyze and correct.

I have found it beneficial to constantly engage in a back-and-forth conversation with the LLM, using its responses to guide my subsequent questions. This technique can assist in customizing the LLM’s outputs to better suit your specific needs and improve the overall coding experience.

Conclusion

Incorporating these instructions enables individuals to utilize the complete capabilities of LLMs for coding in SQL, R, and Python with greater efficiency. This allows for a harmonious balance between the strength of these advanced tools and the essential supervision that can only be provided by a human.

Keep these 5 tips in mind for your next coding project when you have an LLM by your side:

-

Language Learning Models (LLMs) like ChatGPT, Claude, and Gemini are powerful tools, but they are not human coders and have limitations. They can produce buggy or outdated code and may get off track.

-

It’s crucial to have a basic understanding of SQL, R, or Python (depending on your programming language) to guide the LLM effectively. Knowing commands for various tasks and understanding error messages can save you considerable time.

-

LLMs don’t have automatic knowledge of your data. You need to ensure the LLM understands your data as much as you do, which can be achieved by creating a comprehensive Data Dictionary.

-

Before starting your analysis, have a clear plan and hypothesis in place. Giving the LLM proper context and explicitly instructing it can yield more meaningful insights.

-

Request code from the LLM in small increments to prevent overwhelming it and to maintain control over the process. This also allows for constant review and correction of the code.

Most importantly, keep in mind that an LLM can serve as a useful resource for coding in SQL, R, and Python. However, it is crucial to use it correctly and with human supervision to avoid any potential errors.